An Adaptor Strategy for Enterprise Risk Management

By M. Bruce Beck, D. Ingram and M. Thompson

Risk Management, April 2022

Part I

The Nuts and Bolts: Cascade Feedback Control

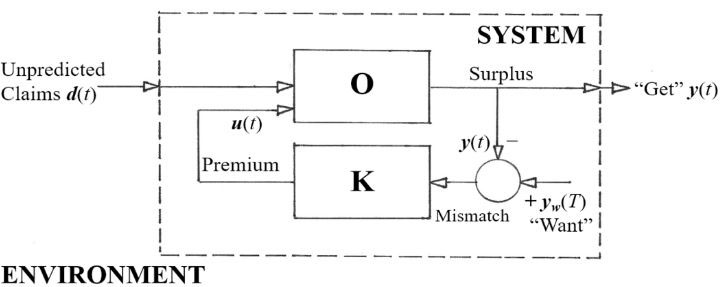

Studying the data on market risks, asset behaviors, profit-loss, and so on; making a recommendation; and anticipating new data for refining and fine-tuning recommendations for the next decision period—this is the good actuarial life. To a control engineer this is a feedback loop in a “block diagram.” Figure 1, in fact.

Sitting in the Driver’s Seat

Claims enter the dashed box of the “System” (the insurer) from the left in Figure 1—from the source peril realm in the business’s risk environment. The actuary gathers information on their impact on the “Surplus” performance of the insurer, heading off to the right; and detects when there is a “Mismatch” between the “Wants” of the firm and actual experience, its “Gets.” If there is a mismatch, the actuarial magic occurs inside the “K” box. It produces a recommendation of changes to the premium charged and feeds this back to the left and around the loop to become an input to company operations (“O” box).

Figure 1

The feedback loop in a control engineering block diagram. The system illustrated is that of making premium-setting decisions for a with-profits insurance scheme.
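For readers who prefer to see the loop of Figure 1 in motion, here is a minimal sketch in Python. It is illustrative only. We assume a proportional rule in the K box and a one-line surplus model (premium minus claims) for the O box. Neither is taken from the work of Benjamin and Balzer, and the names `simulate_loop`, `gain`, and `noise_sd`, along with all the numbers, are hypothetical.

```python
import random


def simulate_loop(wants=100.0, gain=0.5, periods=40, noise_sd=20.0, seed=0):
    """Simulate a toy premium-setting feedback loop.

    Assumptions (illustrative, not from the article): the surplus "Gets"
    equals premium minus claims, and the K box applies a proportional
    correction to the premium in each decision period.
    """
    rng = random.Random(seed)
    premium = 1000.0  # current input to company operations (O box)
    history = []
    for _ in range(periods):
        claims = 950.0 + rng.gauss(0.0, noise_sd)  # disturbance from the environment
        gets = premium - claims                    # O box: surplus actually achieved
        mismatch = wants - gets                    # comparator: Wants minus Gets
        premium += gain * mismatch                 # K box: recommended premium change
        history.append((premium, gets, mismatch))
    return history


if __name__ == "__main__":
    for premium, gets, mismatch in simulate_loop()[-3:]:
        print(f"premium={premium:8.2f}  gets={gets:7.2f}  mismatch={mismatch:7.2f}")
```

With the noise switched off (`noise_sd=0.0`), the premium in this toy settles where "Gets" equals "Wants". With noise, the loop keeps making small corrections around that point, which is the routine fine-tuning described above.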

Actuarial science first encountered such control engineering as long ago as the turn of 1970/71, when actuarial professional Bernard Benjamin met control engineer Les Balzer (by chance) at the Computer Control Laboratory in the University of Cambridge. Their goal was to stabilize the financial performance of an insurer’s profit-sharing scheme in the face of unpredicted claims being submitted.[1] They did so by the simple graphical manipulation of a block diagram such as Figure 1. How times have changed.

While Figure 1 may not be familiar to most actuaries, the concepts in it are their bread and butter. The trouble is, who exactly occupies the driving seat of the controller K box?

But First and Foremost: Let Not a Fundamental Principle of Systems Thinking Pass by Without Notice

Our world has just been severed in Figure 1 (as it will be again in Figure 3 later): To extract the “System” from all that remains, this being its enfolding “Environment.” Yet the latter is every bit as much a system as the former.

It is just that we choose to distinguish the two so that we may home in on what interests us primarily—the “System” (in Figure 1), that is—hence to analyze this “System” in detail. Yet we fully acknowledge that there are things out there, in the surrounding “Environment” of Figure 1, that are material to our analysis. They, however, should not be the focus of our analysis. We choose not to analyze the “Environment” in anything like the same detail as the “System.”

We may say that the systems thinking here is invested in drawing the boundary between “System” and “Environment.” This is not trivial. And doubtless it will not be best crafted first time round. Systems analysis is then all the subsequent analyzing of the configuration and behavior of the “System,” conditional upon some carefully selected, possibly gross simplifying assumptions about its “Environment.”

But who, as we have asked, gets to sit in the driving seat of the “System” of Figure 1?

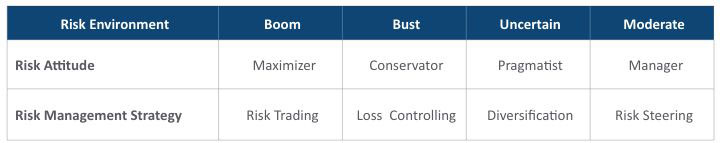

Just the One Seat, but Four Candidates Vying to Occupy it

We have written previously of there being four business strategies, each aligned with four stances—viewpoints, or attitudes—shaped by the experience of four different economic risk environments.[2] We have identified four “seasons” of risk that make up the familiar business cycle: The four “risk environments” of Figure 2. Each season in Figure 2 is associated with a risk attitude and a risk strategy. And a season of risk may often last for several years. Sometimes, however, we experience dislocations and shifts among the seasons within a very compressed timespan, as during the pandemic.

Figure 2

The Four Seasons and Strategies of Rational Adaptability in ERM

Whatever the speed of such significant, qualitative changes in a business’s risk environment, we have argued in favor of switching the accompanying risk attitude and control strategy in as timely and orderly a manner as possible. We called this “Rational Adaptability for ERM.”

The Bigger Issue: Rational Adaptability

For as long as the season of risk endures, unchanging, the basic feedback control process pictured in Figure 1 will be fine, provided the right autopilot (“right” for the prevailing season, that is) is occupying the driving seat of the decision-making K box. This basic process encounters significant difficulties, however, when the company’s risk environment changes qualitatively, as it shifts from one season of risk to another.

How does one get one autopilot out of the control box K in Figure 1 and replace it with another, given the three alternatives of Figure 2? How, in other words, should one decision-making strategy be swapped out of the company’s driving seat and replaced by the decision strategy that is right for the new season?

By way of a first response, we have previously proposed a process of Rational Adaptability for reacting to the changing risk environments. Now we have a name for the practitioner of that process: The adaptor.[3] The adaptor’s goal is to pivot the organization when the environment changes, addressing the new environment by changing the risk strategy of Figure 2.

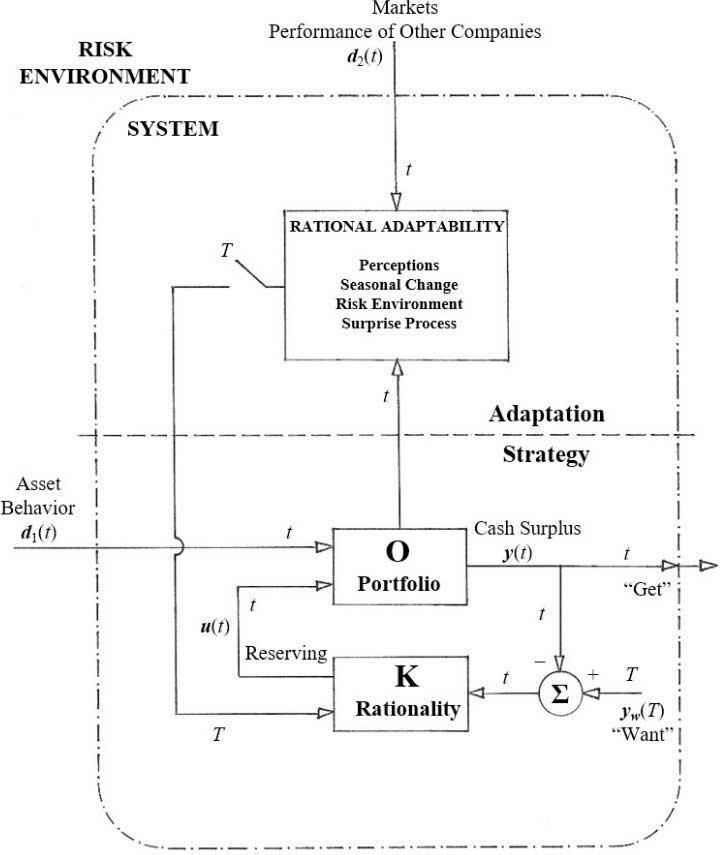

One way to accomplish this is known as a cascade control scheme. Figure 3, which has been set up for reserving decisions (in the K box) in relation to the company’s portfolio of assets and liabilities in the O box, is an example of a cascade control scheme.

Strategy

To begin, think of the lower half of the cascade control system in Figure 3, its “Strategy” segment, as a sort of “engine room” of the insurance company. In this instance, we have illustrated it with the idea of the reserving decision: Of what to put into assets, and what to take out of them, in the presence of ever-changing disturbances to the behavior of the assets. These disturbances, we note, lie quite outside the insurance company. They have their sources in the surrounding “Risk Environment” of the “System” (in Figure 3), and typically their variations over time would be tracked by indexes such as the S&P 500. Accordingly, in the “Strategy” segment, we assume we have four possible versions of the way in which feedback control can be applied to choosing the reserving decision. Each is meant to ensure that the company gets the cash surplus it wants, irrespective of the uncertainty associated with asset behavior in the marketplace.

We suppose we can have any one of the four different decision-making strategies of Figure 2 placed in the K box in the lower segment of the company in Figure 3, i.e., any one of four differently structured control rules, or four distinct autopilots. In any given season of risk (and at this engine-room level) we have either the maximizer sitting in the driving seat, or the manager, or the conservator, or the pragmatist. For as long as the strategy in the driving seat is correct (and so well-tailored) for the current season of risk—in other words the pattern of risk associated with asset behavior on the left-hand side of Figure 3—then the company is basically getting what it wants, and doing perfectly well in that respect.

Figure 3

Rational Adaptation as a Cascade Control Scheme for Reserving Decisions[4]

The trouble arises, of course, when one has one of the strategies in the controller block (maximizer, manager, conservator, or pragmatist), but the season of risk has changed. What to do? For this is a qualitative change: A serious difference, even a dislocation, in the pattern and the basic statistical properties of the risks to which the company is exposed. Such circumstances bring us to the heart of what Rational Adaptability (in ERM) looks like in the frame of control engineering.

Adaptation

Look up then to the “Adaptation” segment in the upper half of the cascade control scheme of Figure 3. In fact, follow the arrow rising from the “Strategy” segment below. Symbolically, it conveys operational information about all the goings-on in that lower segment. The arrow reaches the Rational Adaptability block, as does a stream of information about other elements of the company’s “Risk Environment” (such as competitor behavior in the company’s product markets). To the left of the Rational Adaptability block, on its outgoing decision-information flow, is the control-engineering depiction of a switch. It is shown as being open at the moment.

Here is the next crucial step in our argument.

Place our idea of the adaptor in the Rational Adaptor block. Suppose the adaptor detects—somehow—that the season of risk no longer comports with the company’s strategy, being driven along, as it is, in the engine room of the controller block below (in the “Strategy” segment of Figure 3). Suppose further that—again, somehow—the adaptor has determined what the new season of risk is, hence what should be the nature of the new autopilot (maximizer, manager, conservator, or pragmatist) sitting in the driving seat of that K box of “Strategy.”

At this point the adaptor closes the switch and the signal passes down to the engine room: To swap out the maximizer for the pragmatist, say (or the conservator for the manager, or whatever), given that the season of risk has changed from boom to uncertain (or from bust to moderate, or whatever).
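The switching just described can be sketched in a few lines of Python. This is a hedged illustration, not the authors' implementation. The pairing of seasons with strategies follows the article, but the class `Adaptor`, the function `run_cascade`, and the idea of a fixed number of routine steps per review period are all assumptions made for the example. The diagnosis of the season is simply handed to the adaptor; how that diagnosis is reached is left open here.

```python
# Season-to-strategy pairing as described in the article (Figure 2).
STRATEGY_FOR_SEASON = {
    "boom": "maximizer",
    "moderate": "manager",
    "bust": "conservator",
    "uncertain": "pragmatist",
}


class Adaptor:
    """Upper ("Adaptation") segment: decides when to close the switch."""

    def __init__(self, initial_season):
        self.active = STRATEGY_FOR_SEASON[initial_season]
        self.switch_log = []  # entries: (period, old strategy, new strategy)

    def review(self, period, diagnosed_season):
        """Close the switch only if the active strategy no longer fits."""
        wanted = STRATEGY_FOR_SEASON[diagnosed_season]
        if wanted != self.active:
            self.switch_log.append((period, self.active, wanted))
            self.active = wanted
        return self.active


def run_cascade(diagnosed_seasons, steps_per_period=4):
    """Run routine control steps inside each (hypothetical) review period."""
    adaptor = Adaptor(diagnosed_seasons[0])
    trace = []
    for period, season in enumerate(diagnosed_seasons):
        strategy = adaptor.review(period, season)  # upper segment: possible swap
        for step in range(steps_per_period):       # lower segment: routine control
            trace.append((period, step, strategy))
    return adaptor.switch_log, trace


if __name__ == "__main__":
    log, _ = run_cascade(["boom", "boom", "uncertain", "bust"])
    for period, old, new in log:
        print(f"period {period}: swap {old} -> {new}")
```

The design choice worth noting is that the lower segment never decides to replace itself. It only ever runs whichever strategy the upper segment last installed.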

We need to inspect Figure 3 more closely.

Quick Time and Slow Time

Looking at the detail of Figure 3, nearly all of the arrows (they denote flows of risk, information, or money) are labeled with a lowercase t, to indicate, and emphasize, micro time, or quick time, i.e., things that vary (relatively) rapidly with time, and things that vary in the short term.

Only two of the arrows—the all-important switching signal from the upper RA block and, less important for present purposes, the “Want” arrow—are labeled with an uppercase T for macro time, i.e., slow time, or seasonal time (or change over the longer term). Therefore, we need to make a distinction here between what happens from season to season, upper-case T, and what happens with regard to routine day-to-day variations of operations, which is lower-case t.[5] Significantly, the switch next to the Rational Adaptation block of the cascade control scheme of Figure 3 is closed only occasionally, albeit in an “instant.” While the switching itself is idealized as happening in a moment (“just like that”), such occasions are relatively few and far between. They are marked, then, as points in macro time T.

Well, that would be the customary idea, at least in principle. But now we have had the pandemic, when switch-throwing has been proceeding in near real time, with, therefore, T almost merging with t. Very sizeable chops, changes, and shifts in strategy-policy have been implemented over a compressed span of time, only then to be re-implemented.

Yet even for the more familiar decision making of businesses, there’s a problem! For what, of course, is to be done about those two (very big) “somehows” just mentioned? What is to be done about:

- Diagnosing the nature of the risk environment surrounding the company, i.e., determining the season of risk?

- Sensing and detecting an imminent or actual transition in that risk environment?

- And diagnosing which strategy should be deployed into the driving seat to cope with the new, post-transition season of risk?

First Response: Diagnosing Surprise As a “Mega-Mismatch”

In our earlier work on Rational Adaptation for ERM (and in the absence of anything like a Figure 3) the adaptor’s switch was pulled in accordance with a “Surprise” typology. And “Surprise,” we should point out, has everything to do with organizational learning in this context.[6]

It is helpful to start by drawing a parallel. What the “Mismatch” of basic feedback control is to routine operating “Strategy” in Figure 1, so the “Surprise” of cascade control is to the switching function of Rational Adaptability in the upper “Adaptation” segment of Figure 3.

But there the similarity ceases. Simply put, we should think of “Surprise” as a “Mega-Mismatch.” As a whole, it is a basic belief-challenging dissonance between the way that the autopilot in the driving seat of the K box in the “Strategy” segment of Figure 3 holds the world to be and the way that the world actually is. At the more mundane level of routine technicalities, down in the engine room of the lower segment of Figure 3 (and focusing on the so-called comparator junction, the small circle with a summation sign Σ in it), it is a “Mismatch” between “Gets” and “Wants” that persists. Yet that technical dissonance is argued away by the occupant of the company driving seat. Until, in truth persisting, it grows … and grows … and grows … until … “Surprise”!

According to this, our first (and earlier) way of handling Rational Adaptation, such “Surprise” is the very nub of the matter: It is where the totally expected “constructed world” of any of the four autopilots in the driving seat of the “Strategy” K box is confronted with the “one world of reality” we all inhabit—irrespective of our core beliefs about the risks out there. “Surprise,” when (if) eventually it dawns—when it dawns upon the given autopilot that the company is flying through atmospheric conditions quite unlike what it has presumed will forever prevail (and unquestioned so, like an article of faith)—is a “Mega” version of the everyday “Mismatch” of the feedback control in Figure 1. What is more, this “Mega-Mismatch” cannot be rectified by mere everyday fine-tuning.

The surprise has to be shocking enough to provoke learning; to shake the foundational convictions of each of the four risk attitudes in Figure 2. And someone needs to throw the switch beside the Rational Adaptation block up above in Figure 3.
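One way to picture a “Mismatch” that persists and grows until it can no longer be argued away is a cumulative-sum (CUSUM) detector. To be clear, this is a technique we are swapping in for illustration. The article does not prescribe it, and the function name `first_surprise` and the values of `slack` and `threshold` are hypothetical. The sketch accumulates mismatch beyond a tolerated slack and reports the first period at which the accumulated evidence crosses a threshold.

```python
def first_surprise(mismatches, slack=1.0, threshold=8.0):
    """Return the index at which persistent mismatch first becomes a
    "Surprise", or None if it never does.

    Two-sided CUSUM: mismatches smaller than `slack` in magnitude are
    treated as routine and add no evidence; larger ones accumulate.
    """
    upper = 0.0  # accumulated evidence of persistently positive mismatch
    lower = 0.0  # accumulated evidence of persistently negative mismatch
    for i, m in enumerate(mismatches):
        upper = max(0.0, upper + m - slack)
        lower = max(0.0, lower - m - slack)
        if upper > threshold or lower > threshold:
            return i
    return None


if __name__ == "__main__":
    routine = [0.5, -0.5] * 20
    persistent = [0.5, -0.5] * 5 + [3.0] * 10
    print(first_surprise(routine))     # routine noise never accumulates
    print(first_surprise(persistent))  # index at which the evidence crosses the threshold
```

Everyday mismatches that change sign from period to period leave both sums at zero, so no alarm is raised. Only a mismatch that keeps pointing the same way builds up enough evidence to trigger the detector.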

Empirical Experiences of Surprise

The “Surprise” typology is one means of determining how exactly to pull the switch. More prosaically, it is a typology of the differing pieces of empirical (non-quantitative) evidence of what is experienced by each of the four “Strategy” proponents when (eventually) it is surprised to realize that the season of risk is not at all that for which it is suited. Each of the four strategies can suffer three such surprises. For instance, there will be three occasions when the manager—who is so well attuned to the moderate season of risk—will need to be surprised and utterly shaken out of the manager’s way of making decisions. Such occasions will occur when at last it dawns upon this manager that a season of risk that is boom, bust, or uncertain prevails “out there.”

Figure 2 shows the four strategies, each tailored to one of the four risk environments. At any point in time, one of the four strategies will be active, seated in the K box of the lower “Strategy” segment of Figure 3. Left unattended, each autopilot will eventually cease to be aligned with the inexorably evolving, eventually shifting, season of risk. The actual business environment will have transitioned.

Eventually (again), “Surprise” will dawn. And each of the four risk attitudes may be asked for their experiences of company performance vis à vis their perceptions of reality. Among the 12 classes of “Surprise” will be summary experiences such as:

- “Expected windfalls from time to time don’t happen—only loss after loss after loss,” as the pragmatist would protest when confronted with bust (as opposed to an uncertain risk environment).

- “Total collapse,” explodes the maximizer after bust has happened.

- “Unexpected runs of good and/or bad luck have happened,” muses the pragmatist when surprised by the manager’s season of moderate.

- “Others prosper without exercising any caution,” complains the conservator when the season of risk is either moderate or boom.

And so on.
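The count of 12 classes follows from simple arithmetic: Each of the four strategies is at home in one season and can be surprised by the other three. A small Python sketch makes the enumeration explicit. The pairing of strategy and season is as described in this article. The function name `surprise_classes` is ours, for illustration only.

```python
from itertools import product

# The season in which each strategy is "at home" (Figure 2).
SEASON_OF = {
    "maximizer": "boom",
    "manager": "moderate",
    "conservator": "bust",
    "pragmatist": "uncertain",
}


def surprise_classes():
    """List every (strategy, actual season) pair in which the strategy
    finds itself in a season other than its own."""
    return [
        (strategy, season)
        for strategy, season in product(SEASON_OF, SEASON_OF.values())
        if SEASON_OF[strategy] != season
    ]


if __name__ == "__main__":
    classes = surprise_classes()
    print(len(classes))
    for strategy, season in classes:
        print(f"{strategy} surprised by {season}")
```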

Given that there is only ever one world of reality, but four constructed worlds, reports of empirical experiences such as the above will always embrace one that is correct and three that are false. Indeed, the not-to-be-underestimated richness of the moans, groans, and jealous complaints derives from the plurality of the four voices in the company—provided their viability has been preserved and nurtured by the company (something we shall address in Part II of this article).

But which of the four voices gets to get it truly right, and correctly seizes the driving seat of company strategy in the K box? For each will insist it has the right answer, including the one who has actually got it right, and is (presumably) smiling, perhaps rather smugly.[7]

For instance, after the financial crisis of 2007, what we call a risk season of uncertain prevailed for several years, one well suited to the risk strategy of the pragmatist in Figure 2. Yet some companies stuck with the conservator strategy, convinced that any period of uncertainty was but a mere momentary pause (in the bust environment of 2008/9) before the bust, surely, would again prevail.

As far as we know, however, “Surprise” typologies are not the stuff of which feedback control loops are made. So how might the adaptor go about determining a shift in the season of risk or a qualitative shift in apparent company strategy and success? For above all, we should like the adaptor to arrive at a definitive diagnosis, hence the throwing of the switch in Figure 3. Sooner rather than later. And faster surely than the seeming eternity it may take for any of the four autopilots to be shocked into realizing just how inappropriate their risk strategy is—and get out of the driving seat! Because for as long as the season of risk is what the “Strategy” group in the driving seat always believes is the season of risk, then they will insist there is no need to change anything.

To this end—of expediting the definitive diagnosis—we grant the adaptor some hypothetical models with which to work.

Second Response: Control Engineering and Its Models

In our second response, therefore, we approach diagnosis of the “Surprise” typology and the adaptor’s switching function through the surrogate of models, but models with a special control-engineering ingredient.

Instead of soliciting the views of the vying and perhaps even surprised autopilots, we assume various streams of formal (time-series) data become available to the adaptor and accumulate day-by-day:

- Data streams, as one would expect, associated with the shifting “Risk Environment” in Figure 3, such as asset values tracked by various indexes (the d1 impinging on “Strategy”) and the behaviors of competitors and their products in the marketplace (the d2); and

- Data streams from within the “System” in Figure 3, on the pattern of the company’s operational decision making, as issued from the controller K box (the u in Figure 3), and data tracking its business performance (the y).

Given these time-series data, we presume various univariate or input-output econometric-like models may be employed for analysis of the shifting nature of the company’s risk environment and of its performance in the face of that environment—not least in respect of the nature of its decision making (in the K box of Figure 3). These are the discrete-time models of control engineering (just as used by Balzer and Benjamin). Each such model may be reconciled continuously with the accumulating operational data. Each model contains a set of parameters, its coefficients. So here is the special control-engineering thing: We make the model parameters time-varying.

The Special Ingredient: Time-varying Model Parameters

Our models are regarded as the simplest of models, hence wrong. But they can be enabled to be right in the moment of the decision making (in quick time t) by being populated with parameters presumed to vary in slow time T.

These parameters, moreover, will be deemed sufficiently important in their own right to merit their own express label α. They are not mere handmaidens to the workings of the more important variables: The d(t), u(t), and the y(t). They have to do with the structure of things: Of what is related to what (among the d(t), u(t), and the y(t)), in what way, and by what causal mechanism. In other words, they have to do with the make-up, or the design, of a system. They are the time-varying parameters denoted α(T).

Just the label itself, the α(T), will assume a dominant stature in Part II of this article. It is shorthand for the parameter space of the system.
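To make the idea of a time-varying parameter concrete, consider the simplest possible input-output model, y(t) = α·u(t), with α allowed to drift. One standard way of tracking such a parameter is recursive least squares with exponential forgetting. We offer it here as a hedged sketch of the general idea, not as the estimation scheme used in our research report. The function name `track_alpha` and the default values of `forgetting`, `alpha0`, and `p0` are hypothetical.

```python
def track_alpha(us, ys, forgetting=0.9, alpha0=0.0, p0=1000.0):
    """Track a scalar time-varying parameter alpha in y(t) = alpha * u(t).

    Recursive least squares with exponential forgetting: older data are
    discounted by `forgetting` (between 0 and 1) at every step, so the
    estimate can follow a parameter that changes over time.
    """
    alpha = alpha0  # current parameter estimate
    p = p0          # estimate uncertainty (large value = vague prior)
    estimates = []
    for u, y in zip(us, ys):
        gain = p * u / (forgetting + u * p * u)  # weight given to the new datum
        alpha += gain * (y - alpha * u)          # correct by the prediction error
        p = (p - gain * u * p) / forgetting      # update, then inflate uncertainty
        estimates.append(alpha)
    return estimates


if __name__ == "__main__":
    # Hypothetical data: the true alpha shifts from 2 to 5 part-way through.
    us = [1.0] * 90
    ys = [2.0] * 30 + [5.0] * 60
    est = track_alpha(us, ys)
    print(round(est[29], 3), round(est[-1], 3))
```

In this toy, the estimate sits at the old value of α until the data change and then migrates to the new one. A smaller `forgetting` value makes the estimate follow changes faster, at the price of being more easily pushed around by noise.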

Toward Part II of This Article: Adaptor “Exceptionalism”

There is a distinctive air of exceptionalism about the adaptor: Of otherness; of something quite apart. This is entirely intentional. Our adaptor is to be fashioned upon the archetype of the hermit: The fifth rationality of the Cultural Theory of social anthropology, which is the theory that has driven the shaping of Rational Adaptability for ERM hitherto—but employing only the basic four rationalities.

Thus, whatever it is each of the other four attitudes (rationalities) of Figure 2 takes heed of (in its own distinctively different way)—from among the d(t), the u(t), and the y(t), that is—we shall charge our adaptor with focusing its attention instead on the α(T). In doing so, we are also assigning an elevated status to the role of data about the α(T) in the “Surprise”-complementing diagnostic work of the adaptor—with significance above and beyond that of the d(t), the u(t), and the y(t).

But there is more to the parameter space of α(T)—much more, and more to the adaptor’s exceptionalism—than the diagnostic use of the α(T) in supporting the adaptor’s switching function. This extra has to do with what we are calling the nurturing function of the adaptor. It amounts to a grander consideration: Of how to nurture the make-up—the fabric or the structure of the system—such that it may perform with resilience. It will entail investing (continuously) in the human, financial, and material resources of the company. Its presentation and discussion occupy Part II of this article.

We shall see there that the bare essentials of this systemic property of resilience can be deduced from how natural ecosystems are organized. Within which organizational makeup will reside a company’s capacity to achieve the following two tasks: First, to navigate with aplomb through all the seasons of risk and beyond into the yet more-distant future; and, second, to elevate the prospects of the company fruitfully restructuring its makeup, not least under conditions of what economist Schumpeter would describe as “creative destruction.”

In other words, the adaptor’s nurturing of the makeup of the company should enable the company to exhibit not only the familiar “bounce back” of resilience, but also that of “bounce forward,” as some economists (again) have put it. The best practical illustration to hand of something akin to the adaptor’s nurturing is the oversight of human resources distributed across the start-up companies housed on the campus of an innovation ecosystem in Linz, Austria. This case study will be presented in Part II.

A Link by Which to Clamber Into the Parameter Space of α(T)

Getting into the “mindset” of the parameter space of α(T) is not easy. Nor, doubtless, will such a strange thought be familiar to many. It is vital, however, to getting to the bottom of what resilience might amount to. Here is a link that takes you to a supplementary document providing significantly more in-depth discussion and elaboration of the systems thinking logically connecting Parts I and II of this article.

Yet reading and digesting the link is not a prerequisite for reading Part II, when this article is resumed in the next issue of this newsletter. But then again, every opportunity is taken in the link to leaven the clambering into a parameter space. Wherever possible, our discussion in there is tied to more familiar subjects, especially economics, but also ecology (as the origin of resilience) and social anthropology (as the theoretical basis of RA for ERM). There will also, however, be some more control engineering. In that sense, the link is an exercise in cross-disciplinary “Systems Thinking,” just as are this Part I and the coming Part II of our article.

So, what, in short, is there for us in the link?

It departs from the control engineering of guided missiles and closes with a return to the premium setting problem of Benjamin and Balzer, with which we began this Part I of our article. In sum, one can end up with something really quite sophisticated—of learning and controlling at one and the same time—and worthy indeed of being called “super magic” in the feedback loop of Figure 1 (or equivalently Figure 3). Control engineers would refer to it as dual adaptive control. It originates inter alia in the guidance schemes of guided missiles.

En route, a firm distinction is drawn between the systemic properties of stability in the short term and resilience over the long term. It is this that brings us to the heart of the parameter space of α(T)—even the visual imagery of an animated stop-motion picture.

We pass over the following as well. If the α(T) appear specifically in a model of the decision-making strategy in the controller K box of Figure 1, they enable a single structure of the decision rule to cycle (over time T) through the four archetypal “poles” of maximizer, manager, conservator, and pragmatist (from Figure 2). The fourfold variety of decision making in quick time t is approximated by the surrogate of a single rule of decision making whose structure (its parameters α) varies over slow time T, hence variability of the decision strategy.

“Modeling the Variety of Decision Making” (as opposed to modeling the variability of decision making) is notably the title of our recent (2021) SOA Research Report, upon which this article is based. It has to be said, however, that both Part II and the link advance measurably beyond the contents of that research report.

Statements of fact and opinions expressed herein are those of the individual authors and are not necessarily those of the Society of Actuaries, the newsletter editors, or the respective authors’ employers.

M. Bruce Beck, Ph.D., is scholar in residence, FASresearch, Vienna, and guest senior research scholar at the International Institute for Applied Systems Analysis (IIASA). He can be reached at mbrucebeck@gmail.com.

David Ingram, FSA, CERA, FRM, PRM, is part-time consultant at Actuarial Risk Management and an independent researcher, writer and speaker regarding ERM and insurers. He can be reached at dingramerm@gmail.com.

Michael Thompson, Ph.D., is emeritus scholar at the International Institute for Applied Systems Analysis (IIASA). He can be reached at michaelthompsonbath@gmail.com.