Who (or What) To Trust? Going Back to Basic Principles

By Mary Pat Campbell

The Stepping Stone, July 2024

When I was a kid, my mom used to drop me off at the local library on the weekend. I’d sit in the nonfiction section for a few hours, perusing the books. I especially loved camping out near the beginning of the Dewey Decimal system, because that’s where the “weird stuff” was.

- 130 Parapsychology and occultism[1]

- 131 Parapsychological and occult methods for achieving well-being, happiness, success

- 132 No longer used — formerly "Mental derangements"

- 133 Specific topics in parapsychology and occultism

- 134 No longer used — formerly "Mesmerism and Clairvoyance"

- 135 Dreams and mysteries

- 136 No longer used — formerly "Mental characteristics"

- 137 Divinatory graphology

- 138 Physiognomy

- 139 Phrenology

I was a teenage Mulder,[2] and though I didn’t have the “I WANT TO BELIEVE” poster on my wall, I may as well have. I sucked in the stories of ESP, telekinesis, and more.

In particular, I got enamored with the stories of the research of J.B. Rhine, who began his career at Duke University and who had started an independent research lab in retirement. He was known for promoting research in parapsychology, particularly in clairvoyance, with patterned cards. They were looking for people who had “second sight.” They had statistics! It worked!

Ultimately, in combing through the nonfiction section of the local library, I also ran into two books that were not pro-ESP: Fads and Fallacies in the Name of Science, by Martin Gardner, and Flim-Flam!, by James Randi. While Martin Gardner was best known for writing on mathematical topics for Scientific American magazine, he also had a talent for amateur magic. James Randi was no amateur at magic. This is relevant to the matter at hand, as magicians are deception experts. In the research of ESP, there was deception galore, whether by researchers or by research subjects.

Reading Randi’s and Gardner’s books, I learned of the many problems of “evidence” for all the paranormal phenomena I had wanted to believe in. The main problem was that it was easy to see how the results could be generated through non-ESP means. It was clear once a skeptical magician showed how research subjects could get information about the cards they were supposed to view via “second sight.” In other cases, it turned out that research data had been falsified to get the desired results.

In no case did researchers get anything other than random results when well-controlled experiments were tried, that is, when the controls were designed by someone who knew how the tricks could be done.

This is similar to earlier debunking of spiritualists by Harry Houdini, such as those taking advantage of the grieving Arthur Conan Doyle and others after World War I. Houdini knew the tricks that could be deployed, and once so many people were taking advantage of the bereaved, he started exposing various prominent mediums and spiritualists. As reported in National Geographic:

In 1922, Scientific American threw down the gauntlet. Any medium demonstrating paranormal abilities under rigorous examination could win a $2,500 prize. Among the mediums vying for the prize in 1924 was Boston socialite Mina Crandon.

Crandon claimed to channel the spirit of her deceased brother Walter, whose crass and aggressive demeanor contrasted with charming Crandon’s. Tables seemed to levitate during her séances, and a bell in a box rang, even when no one touched it. Her apparent abilities won admirers, including researchers who believed she was the real deal.

Houdini remained skeptical. When he participated in a sitting as part of the Scientific American examination, Houdini claimed to have cracked the mystery of Crandon’s ringing bell. She simply rang it with her feet, he said, after he felt them brush against his legs. When all was said and done, Houdini found plenty of evidence for tricks—and none for ghostly abilities. Scientific American ultimately agreed, leaving Crandon empty-handed.[3]

In many cases of trickery by practitioners of the paranormal, one should have looked not to the scientists to investigate, but to those who are used to trickery: the magicians.

You may wonder why I bring up my childhood credulity, and what relevance it has to actuarial work.

I’m a Credulous Fool… Maybe You Are, Too

Let me revisit two earlier pieces I’ve written for The Stepping Stone.

First, in 2013, I wrote the following in “Everybody Cheats, at Least Just a Little Bit: A Review of The (Honest) Truth About Dishonesty, by Dan Ariely”:

Like many forms (especially tax), one generally fills out an insurance application, and then, at the end, signs a statement attesting that all the information above is truthful. Ariely wanted to check the effect of having people sign such a statement before filling out an auto insurance application. Specifically, he looked at the part of the self-reporting miles driven per year (as more miles driven results in higher premiums, usually). The result? Those with the form with the attestation at the top reported, on average, 2400 fewer miles driven than those who had the attestation in the standard place: the bottom. This was about a 9 percent reduction, which reflects the marginal aspect of “normal” cheating.

In a 2021 follow-up article, “Distrust and Verify,” I wrote “It turns out that an insurance example I used to illustrate the theme of the book was based on fraudulent research. Maybe I should have taken a hint from the title of the book.”

The experiment I had described in the 2013 book review had data altered to get the desired results. The alterations, hilariously, were discovered due to font differences in Microsoft Excel, as well as other oddities in the data. The falsification had been discovered by fraud detection experts, Data Colada.[4]

I had wanted the original story to be true, that one could improve underwriting results in auto insurance with this one neat trick! But I was a credulous fool for believing that Just So Story.[5]

As I mentioned in my 2021 follow-up, I should have been a bit more distrustful, especially given that an insurance company supposedly gave up a very simple 9% profit boost. I should have known better.

But maybe I am not alone in being fooled, especially given how widespread the phenomenon of research trickery seems to be.

The Many Ways to Trick or Just Be Wrong in Research

While my two articles above focused on Dan Ariely, who wrote the book I was reviewing, the auto insurance research paper had multiple co-authors. The work of one co-author, Francesca Gino, attracted the attention of the group that detected the data alteration, and they found further alterations in the data underlying other research papers.

It seems that data in this and other experiments had been altered to achieve desired results. Once those alterations were removed, the original interesting conclusions of the research papers turned into non-results.

Francesca Gino has filed a lawsuit against various parties as a result of these publications.[6] As a follow-up, Science published a piece noting what looks like extensive plagiarism by Gino.[7]

At this point it may look like a grudge match between Gino and a variety of outsiders, but it is not only Gino who has been swept up by various searchers for dodgy data in published research.

I often find these stories at the site Retraction Watch,[8] which specifically looks for stories of academic papers retracted from journals. The reasons for retraction are many, and outright fraud is only one of them.

Plagiarism cases abound, as well as questionable methodology, figure recycling (using the same figure in multiple papers, which can indicate fraud of various types), paper mill activity (pumping out worthless papers in great volume), and more. My recent favorite was someone who used Excel’s autofill feature to just fill in for missing data,[9] which is not exactly a legitimate imputation method.[10]

Wrong results show up not only in published academic papers. In following mortality trends, I’ve found publicly available death statistics that were clearly wrong. In one case, it was an IBNR model with out-of-date assumptions.[11] In the other, it was errant population estimates throwing off death rate estimates.[12] In both cases, I followed up with the CDC to get the errors corrected or determine the process for how the errors would eventually be corrected.
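To see why an errant population estimate matters, here is a minimal sketch of a crude death rate calculation. The figures are made up for illustration and are not CDC data, and the function name `crude_death_rate` is my own invention, not anything from an official source.

```python
def crude_death_rate(deaths: int, population: float, per: int = 100_000) -> float:
    """Crude death rate: deaths per `per` people in the population."""
    if population <= 0:
        raise ValueError("population must be positive")
    return deaths / population * per


# Hypothetical figures, for illustration only (not CDC data).
deaths = 9_000
true_population = 1_000_000
errant_population = 900_000  # assumed population estimate that is 10% too low

true_rate = crude_death_rate(deaths, true_population)
errant_rate = crude_death_rate(deaths, errant_population)

# The death count is identical; only the denominator changed.
relative_error = errant_rate / true_rate - 1
print(f"true: {true_rate:.1f}, errant: {errant_rate:.1f} per 100,000")
print(f"overstatement of the rate: {relative_error:.1%}")
```

With the death count held fixed, a denominator that is 10% too low overstates the rate by roughly 11%, which is more than enough to create an apparent mortality trend that is not really there.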

What Can We Actuaries Do? Go Back to the Basics

Once upon a time, I thought that if something was written in a non-fiction book, it must be true. I came across Flim-Flam! and Fads and Fallacies and realized that I was wrong about that assumption. I also stopped trying to flip the light switch with my nonexistent telekinetic powers.

But I can still be fooled, and I expect I will be fooled again. That said, I need not be an easy mark.[13]

As actuaries, our best friends are our professional standards. In the U.S., the Actuarial Standards of Practice, particularly ASOPs 23 and 56, on Data Quality and Modeling, will be helpful here. Our standards give us guidance on the considerations in what we need to check with respect to the data we’re using for our models, and what aspects of our models we should check.

As I mentioned in “Keep Up With the Standards: On ASOP 56, Modeling” from April 2021, here is a handy checklist to review:

3.1 Does our model meet the intended purpose?

3.2 Do we understand the model, especially any weaknesses and limitations?

3.3 Are we relying on data or other information supplied by others?

3.4 Are we relying on models developed by others?

3.5 Are we relying on experts in the development of the model?

3.6 Have we evaluated and mitigated model risk?

3.7 Have we appropriately documented the model?

Items 3.2–3.6 all involve thinking through whether we may have issues with where our model departs from reality. If we are relying on other people’s models or data, there may be shortcomings in these models or data that we may not be aware of, whether they intentionally falsified work or not.

To illustrate ASOP 23, on data quality, let me turn to a lighter, or rather, heavier, non-actuarial topic: sumo.

Know Your Data: Applied to Sumo

With respect to ASOP 23, we are not expected to do a forensic examination of our data sources. However, we are expected to do some reasonability checks, and to understand the nature of the data sets we are working with.

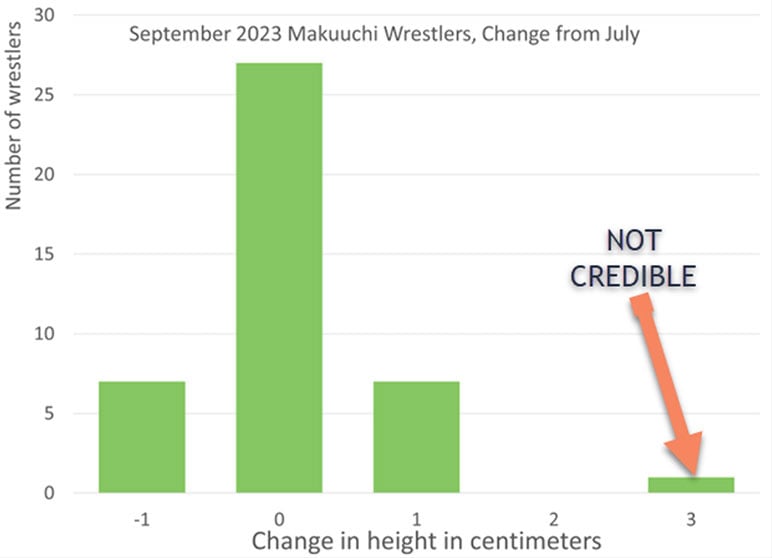

To step away from the seriousness of falsifying data about insurance applications, or, worse, about cancer research, let us consider the case of sumo statistics. I have kept a record of the height and weight of the top-level professional sumo wrestlers since 2022. In September 2023, I noticed an odd change: one wrestler’s height had increased in the records by 3 cm.[14] While sumo wrestlers do often start in the pro ranks as teenagers, they usually don’t get to the top level until well into their twenties. A change of one centimeter either way is pretty usual (they report only whole centimeter heights at the official website), but three centimeters?

The fandom was skeptical, and still is.

The wrestler who had this miraculous growth spurt, Midorifuji, had been the shortest wrestler until the new height was recorded. With it, he just edged out the next-shortest rikishi (sumo wrestler), Tobizaru. When these two are matched up, Tobizaru sure seems to be the taller of the two.

Nothing much depends on the heights (or the weights). There are no size classes in professional sumo, by height or weight. This is just a set of statistics I like to track. I noted in my spreadsheets that I don’t believe the official statistics, and I moved on. If any modeling depended on them, I would investigate further.
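The kind of reasonability check described above can be sketched in a few lines. This is only an illustration: the wrestler names and heights are hypothetical placeholders rather than official records, the helper `flag_height_changes` is a name I made up, and the 1 cm tolerance simply encodes the rule of thumb that a one-centimeter change either way is usual.

```python
def flag_height_changes(
    previous: dict[str, int],
    current: dict[str, int],
    tolerance_cm: int = 1,
) -> dict[str, int]:
    """Return {name: change_cm} where recorded height moved by more than the tolerance."""
    flagged = {}
    for name, new_height in current.items():
        old_height = previous.get(name)
        if old_height is None:
            continue  # newly listed wrestler; nothing to compare against
        change = new_height - old_height
        if abs(change) > tolerance_cm:
            flagged[name] = change
    return flagged


# Hypothetical snapshots in whole centimeters (not the official records).
previous = {"Wrestler A": 171, "Wrestler B": 174, "Wrestler C": 185}
current = {"Wrestler A": 174, "Wrestler B": 175, "Wrestler C": 185, "Wrestler D": 190}

suspicious = flag_height_changes(previous, current)
print(suspicious)  # {'Wrestler A': 3}
```

A check like this does not say which value is wrong. It only points to the records worth a second look, which is about the level of scrutiny a reasonability check calls for.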

Checking Where It Counts, and Following Up

That is different from the spurious death counts or the erroneous death rates in my data sets from the CDC. Those had material effects on my analyses, so I found contact information for people at the CDC. In the first case, I did not get a response, but the errors were fixed. In the second case, I got an answer as to how the process worked, so I learned more about the underlying data.

Many times, we are passive consumers of the data we receive, and we shouldn’t be. In the case of the notorious Excel auto-filler, it was a graduate student in the same field who reached out to the other academic researchers to find out what they did.[15] We are not required to hunt down the sources of all the erroneous data we come across, but when it is something material, consider finding the person or people who generated the error to get it fixed.

Perhaps we do have to think like tricksters ourselves to see where the data may be lying to us. In the case of erroneous data, I can usually find the process that led to the error. It’s usually not fraud, but an erroneous assumption that gets propagated through a system. In the case of the overestimated cancer deaths, it was the change in a reporting lag not yet reflected in an IBNR model.
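As a rough illustration of how a stale reporting-lag assumption can inflate an estimate, consider a simple completion-factor approach. This is a sketch under stated assumptions, not the CDC’s actual model: the function `estimate_ultimate` and all of the numbers are hypothetical.

```python
def estimate_ultimate(reported: float, completion_factor: float) -> float:
    """Gross up reported counts by the share assumed to have been reported so far."""
    if not 0 < completion_factor <= 1:
        raise ValueError("completion_factor must be in (0, 1]")
    return reported / completion_factor


# Hypothetical numbers, for illustration only.
reported_deaths = 800     # deaths reported so far for a recent period
stale_completion = 0.80   # old assumption: 80% of deaths are reported by now
actual_completion = 0.95  # reporting has sped up: 95% are actually in

stale_estimate = estimate_ultimate(reported_deaths, stale_completion)    # about 1,000
better_estimate = estimate_ultimate(reported_deaths, actual_completion)  # about 842

overstatement = stale_estimate / better_estimate - 1
print(f"stale: {stale_estimate:.0f}, updated: {better_estimate:.0f}")
print(f"overstatement from the stale lag assumption: {overstatement:.0%}")
```

Nobody falsified anything in this toy example. The reported count is correct, and the overestimate comes entirely from an assumption that no longer matches how quickly the data arrive.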

With the entry of AI into this world awash in data, we have to be even more skeptical of the quality of the data we are working with. That requires being less credulous about what we are handed, actively engaging with the data and models provided by others, and going back to our basic principles.

Statements of fact and opinions expressed herein are those of the individual authors and are not necessarily those of the Society of Actuaries, the editors, or the respective authors’ employers.

Mary Pat Campbell, FSA, MAAA, is vice president, Insurance Research at Conning Research & Consulting Inc. She can be reached at marypat.campbell@gmail.com or via LinkedIn.

Endnotes

[1] https://en.wikipedia.org/wiki/List_of_Dewey_Decimal_classes#Class_100_%E2%80%93_Philosophy_and_psychology

[2] Fox Mulder is a fictional FBI special agent from the TV series “The X-Files.”

[3] Parissa DJangi. "Harry Houdini’s unlikely last act? Taking on the occult." National Geographic, October 16, 2023, https://www.nationalgeographic.com/history/article/harry-houdini-skeptic-spirits-mediums-fraud.

[4] https://datacolada.org/109. The Data Colada team are Uri Simonsohn, Leif Nelson, and Joe Simmons.

[5] This phrase is a reference to Rudyard Kipling's 1902 Just So Stories, containing fictional and deliberately fanciful tales for children, in which the stories pretend to explain animal characteristics, such as the origin of the spots on the leopard.

[6] Rahem D. Hamid and Claire Yuan. “Embattled by Data Fraud Allegations, Business School Professor Francesca Gino Files Defamation Suit Against Harvard,” The Harvard Crimson, August 3, 2023, https://www.thecrimson.com/article/2023/8/3/hbs-prof-lawsuit-data-fraud-defamation/

[7] Cathleen O’Grady. “Embattled Harvard honesty professor accused of plagiarism,” Science, April 9, 2024, https://www.science.org/content/article/embattled-harvard-honesty-professor-accused-plagiarism.

[8] https://retractionwatch.com/

[9] Frederik Joelving. “Exclusive: Elsevier to retract paper by economist who failed to disclose data tinkering,” Retraction Watch, February 22, 2024, https://retractionwatch.com/2024/02/22/exclusive-elsevier-to-retract-paper-by-economist-who-failed-to-disclose-data-tinkering/.

[10] Gary Smith. “How (not) to deal with missing data: An economist’s take on a controversial study,” Retraction Watch, February 21, 2024, https://retractionwatch.com/2024/02/21/how-not-to-deal-with-missing-data-an-economists-take-on-a-controversial-study/.

[11] Mary Pat Campbell, “A Tale of Know Your Data: The Mystery of the Excess Connecticut Summer Deaths,” August 20, 2022, https://marypatcampbell.substack.com/p/a-tale-of-know-your-data-the-mystery.

[12] Mary Pat Campbell, “Geeking Out: In Death Rates, the Denominator Is Also Important,” March 3, 2024. https://marypatcampbell.substack.com/p/geeking-out-in-death-rates-the-denominator.

[13] An “easy mark” is carnival worker slang or “carny” slang for a gullible person who will be easily cheated out of money by carnival tricks. For this and other carny slang, check out the 2019 Ranker article by Jacob Shelton: “Carnival Worker Slang To Learn If You Don't Want To Be A Mark,” https://www.ranker.com/list/carnival-slang/jacob-shelton.

[14] Mary Pat Campbell, “Sunday Sumo: Actuarial Standards and Sumo Stats,” September 3, 2023, https://marypatcampbell.substack.com/p/sunday-sumo-actuarial-standards-and.

[15] Frederik Joelving, “No data? No problem! Undisclosed tinkering in Excel behind economics paper,” Retraction Watch, February 5, 2024, https://retractionwatch.com/2024/02/05/no-data-no-problem-undisclosed-tinkering-in-excel-behind-economics-paper/.